Prompt Engineering and Context Engineering: The Complete Developer’s Guide to Modern AI System Design

From crafting prompts to architecting intelligent information ecosystems

The AI development landscape has fundamentally shifted from crafting individual prompts to architecting entire information ecosystems. While prompt engineering remains crucial for immediate task optimization, context engineering has emerged as the broader discipline that defines how AI systems access, process, and utilize information at scale. This comprehensive guide explores both approaches, their relationship, and practical implementation strategies for developers building production AI systems.

The foundation: understanding prompt engineering

Prompt engineering is the discipline of designing, optimizing and refining instructions to efficiently guide LLMs toward producing desired outputs. It bridges the gap between human intent and AI capabilities through structured natural language instructions, representing the most direct way developers can influence AI behavior.

Core principles that drive effective prompting

Specificity consistently outperforms vagueness: clear, detailed instructions yield more accurate and consistent results than ambiguous requests. Context provision supplies relevant background information and constraints, while iterative refinement treats prompt engineering as an experimental process that requires continuous testing and optimization. Role definition establishes clear AI personas and behavioral expectations, and explicit output formatting specifies the structure the response should follow.

Modern prompt engineering rests on a handful of foundational techniques that developers must master.

Zero-shot prompting provides direct task instruction without examples, enabling quick task completion but often lacking consistency.

Few-shot prompting provides examples to guide model behavior, dramatically improving output quality and consistency across similar tasks.

Chain-of-thought prompting guides models through step-by-step reasoning, particularly effective for complex problem-solving and mathematical tasks.

Role prompting establishes AI personas that significantly improve response quality and consistency. System prompts provide fundamental instructions that establish AI behavior across entire conversations, while advanced techniques like self-consistency, tree of thought, and ReAct (reasoning + action) enable sophisticated problem-solving capabilities.
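These techniques differ mainly in what goes into the message list sent to the model. The following is a minimal sketch, assuming the common chat convention of `role`/`content` dictionaries; the helper names and the sentiment task are hypothetical and not taken from any particular SDK.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def zero_shot(task: str) -> List[Message]:
    """Zero-shot: the instruction alone, no examples."""
    return [{"role": "user", "content": task}]


def few_shot(task: str, examples: List[Tuple[str, str]], query: str) -> List[Message]:
    """Few-shot: worked input/output pairs precede the real query."""
    messages: List[Message] = [{"role": "system", "content": task}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages


def chain_of_thought(question: str) -> List[Message]:
    """Chain-of-thought: ask for intermediate reasoning before the answer."""
    instruction = (
        "Work through the problem step by step, "
        "then give the final answer on its own line prefixed with 'Answer:'."
    )
    return [{"role": "user", "content": f"{question}\n\n{instruction}"}]


def with_role(persona: str, messages: List[Message]) -> List[Message]:
    """Role prompting: prepend a system message that sets the persona."""
    return [{"role": "system", "content": persona}] + messages


messages = few_shot(
    task="Classify the sentiment of each review as positive or negative.",
    examples=[("Great battery life.", "positive"), ("Broke after a week.", "negative")],
    query="Setup was painless.",
)
```

The resulting list can be passed to whichever chat completion client you use; only the message construction is shown here.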

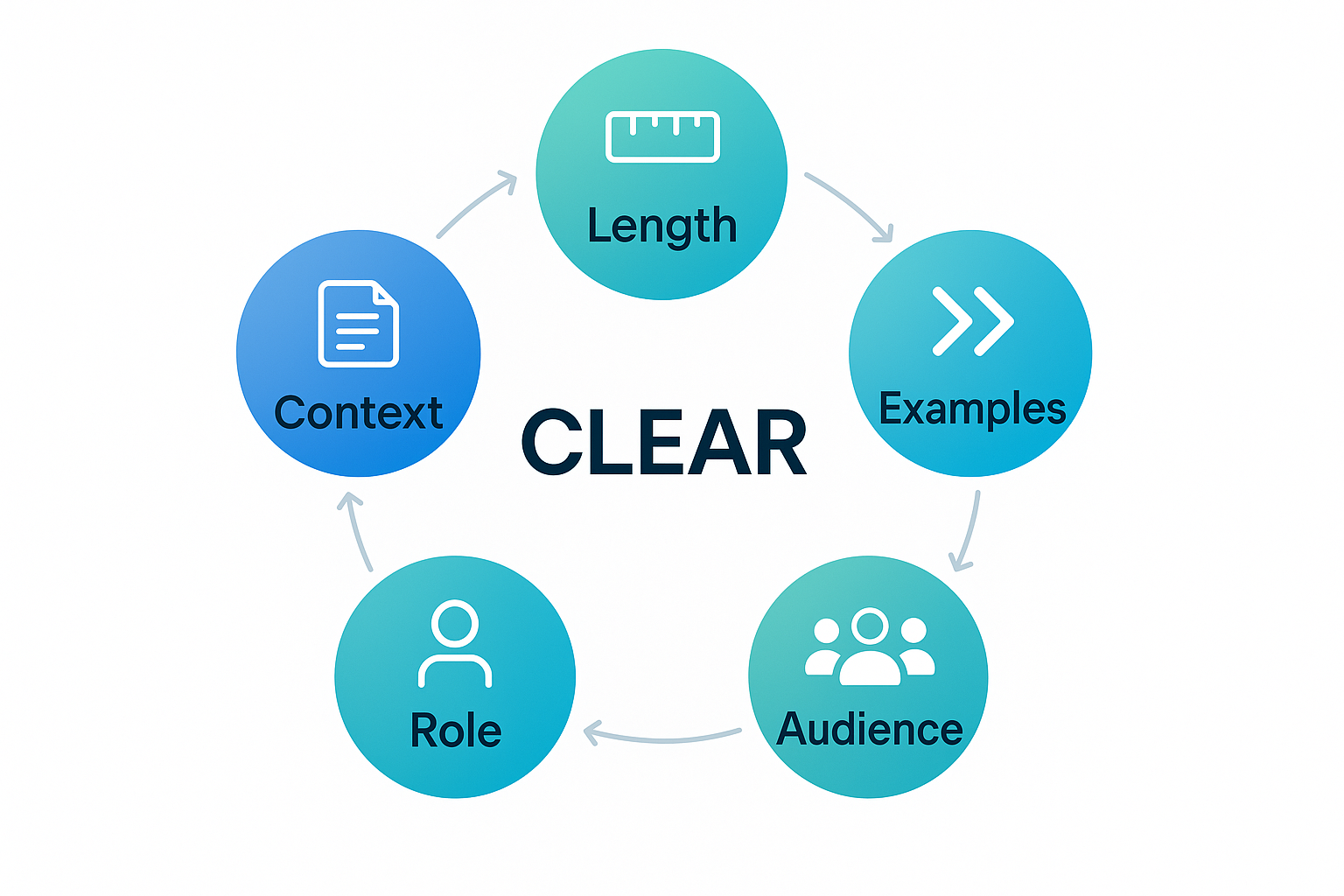

The CLEAR framework provides a structured approach to prompt construction:

Context provides necessary background information

Length specifies desired output length

Examples include relevant demonstrations

Audience defines target users

Role establishes the AI persona

This framework consistently produces higher-quality outputs than ad-hoc prompting approaches.
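One way to keep the five CLEAR components explicit is to model them as fields and render the prompt from them. This is a sketch only: the `ClearPrompt` class, its section labels, and the API migration scenario are illustrative assumptions, not part of the framework itself.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClearPrompt:
    context: str
    length: str
    audience: str
    role: str
    examples: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Assemble the five CLEAR components into one prompt string."""
        sections = [
            f"Role: {self.role}",
            f"Context: {self.context}",
            f"Audience: {self.audience}",
            f"Length: {self.length}",
        ]
        if self.examples:
            bullet_list = "\n".join(f"- {example}" for example in self.examples)
            sections.append(f"Examples:\n{bullet_list}")
        return "\n\n".join(sections)


prompt = ClearPrompt(
    context="We are migrating a REST API from v1 to v2.",
    length="No more than 150 words.",
    audience="Backend developers new to the codebase.",
    role="You are a senior API engineer.",
    examples=["v1: GET /users?id=7 becomes v2: GET /users/7"],
).render()
```

Making each component a required field (except examples) means a missing audience or length shows up as a construction error rather than as a silently weaker prompt.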

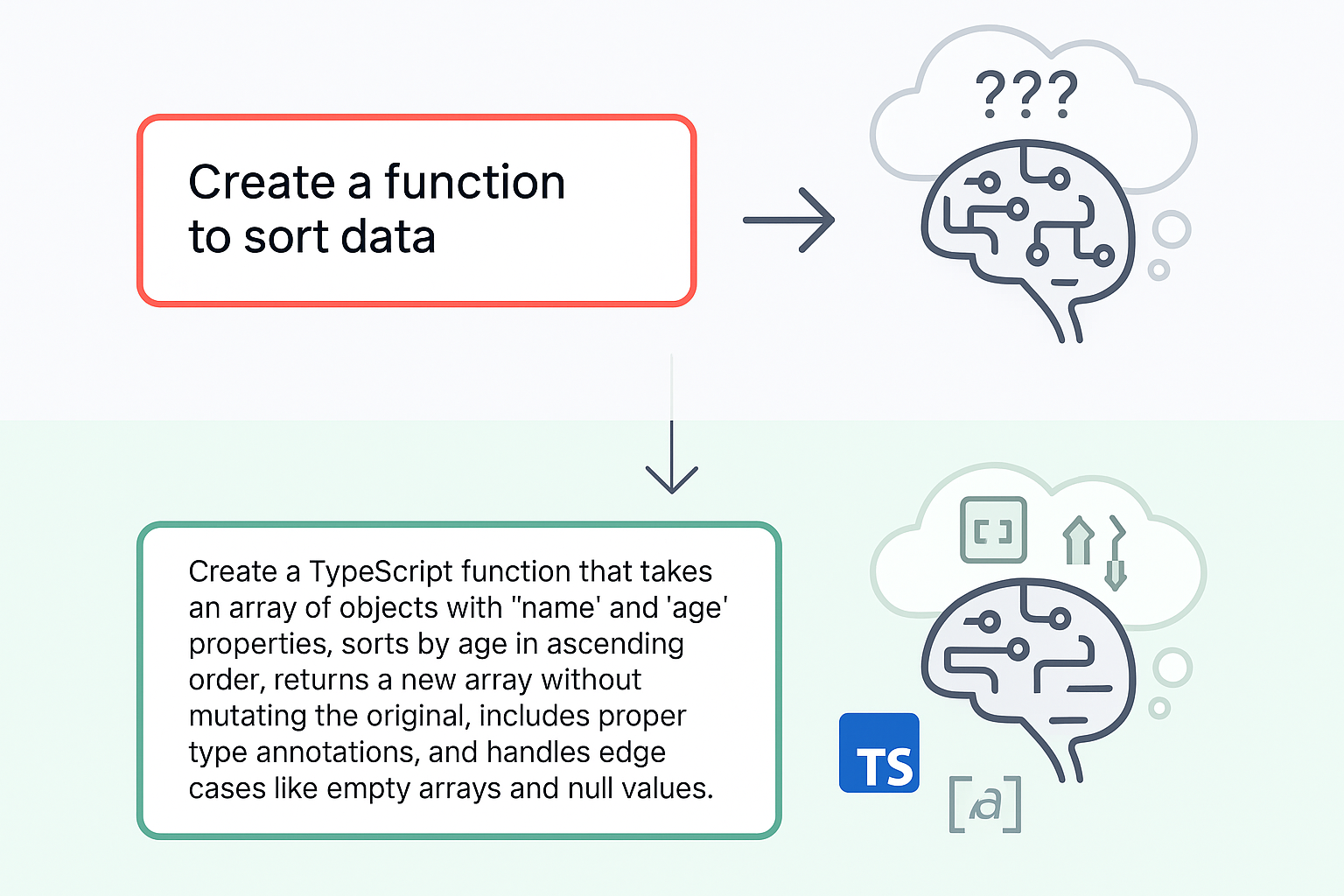

Consider this transformation from basic to more sophisticated prompting. A vague request like “Create a function to sort data” becomes: “Create a TypeScript function that takes an array of objects with ‘name’ and ‘age’ properties, sorts by age in ascending order, returns a new array without mutating the original, includes proper type annotations, and handles edge cases like empty arrays and null values.”

Context engineering: architecting AI information ecosystems

Context engineering represents a fundamental paradigm shift in AI system design, moving beyond individual prompts to encompass entire information ecosystems that surround LLMs. As Andrej Karpathy describes it, “Context engineering is the delicate art and science of filling the context window with just the right information for the next step.”

Defining the scope and architecture

Context engineering designs and builds dynamic systems that provide the right information, tools, and format at the right time, giving LLMs everything needed to accomplish tasks effectively. Unlike prompt engineering’s focus on a single input-output pair, context engineering manages memory, history, tools, and system-wide information flow.

Modern AI systems operate on a two-layer architecture: deterministic context that developers directly control (prompts, documents, system instructions) and probabilistic context that AI agents autonomously find and integrate (web searches, database queries, retrieved documents).

Core concepts enabling dynamic context management

Context windows define the maximum text (measured in tokens) that LLMs can process simultaneously.
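Because the window is finite, applications typically budget tokens before sending a request. The sketch below keeps the system prompt and as many of the most recent turns as fit. It assumes a rough estimate of about four characters per token, which is only a rule of thumb; a real system would use the tokenizer for its specific model. The function names are hypothetical.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (an assumption, not a real tokenizer)."""
    return max(1, len(text) // 4)


def fit_to_window(system_prompt: str, history: List[str], max_tokens: int) -> List[str]:
    """Keep the system prompt plus the most recent turns that fit within max_tokens."""
    budget = max_tokens - estimate_tokens(system_prompt)
    kept: List[str] = []
    # Walk backwards so the newest turns are kept first.
    for turn in reversed(history):
        cost = estimate_tokens(turn)
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    return [system_prompt] + list(reversed(kept))


history = ["a" * 40, "b" * 40, "c" * 40]  # 10 estimated tokens each
window = fit_to_window("s" * 20, history, max_tokens=25)  # system prompt costs 5
```

With a 25-token budget, the oldest turn is dropped and the two newest turns are kept in their original order.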

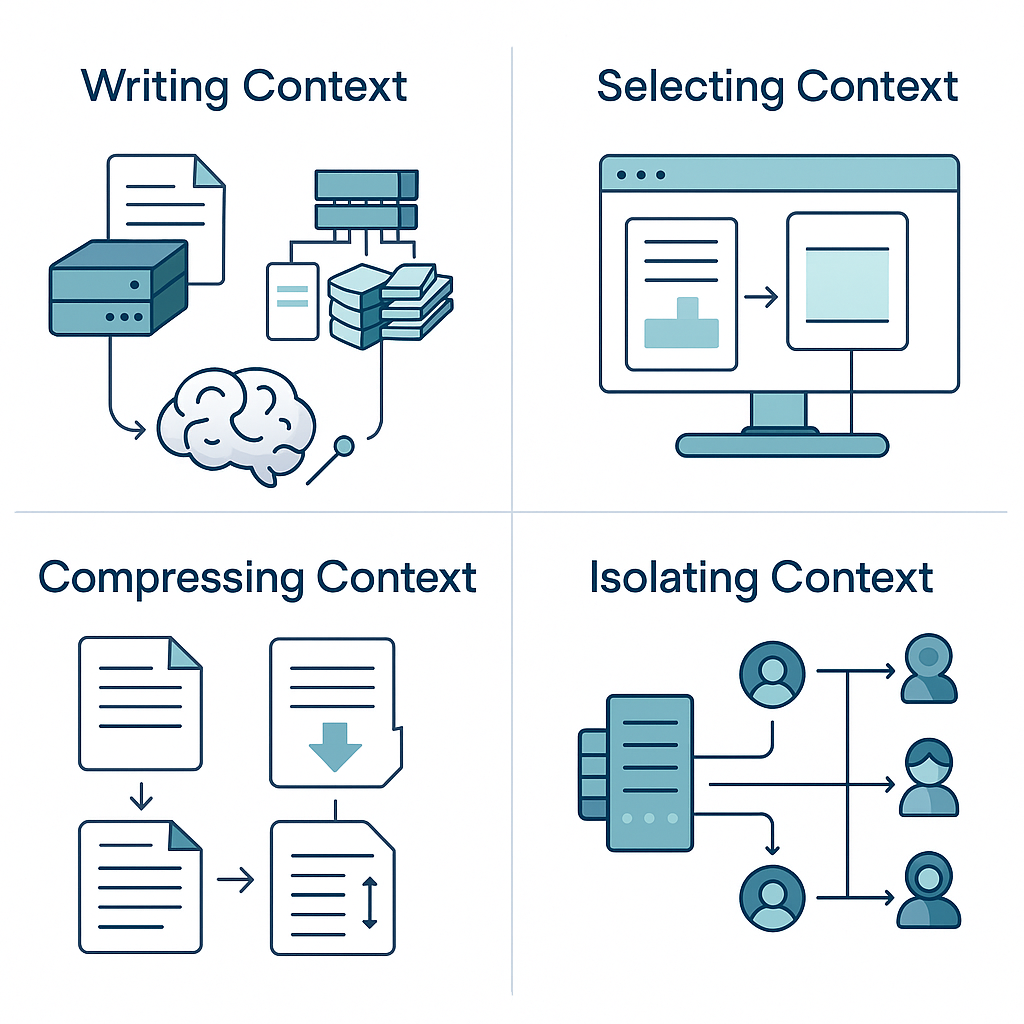

Context management involves four primary strategies:

Writing context saves information outside the context window through scratchpads and memory blocks

Selecting context pulls relevant information into the context window

Compressing context retains only the tokens essential for task completion

Isolating context splits complex contexts across multiple specialized agents
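The four strategies can be sketched as four small functions. Everything here is a simplified stand-in: an in-memory dictionary plays the scratchpad, word overlap plays relevance scoring, and truncation plays summarization. All names are hypothetical.

```python
from typing import Dict, List

scratchpad: Dict[str, str] = {}  # stands in for storage outside the context window


def write_context(key: str, note: str) -> None:
    """Write: persist information outside the window for later use."""
    scratchpad[key] = note


def select_context(query: str, limit: int = 2) -> List[str]:
    """Select: pull back only notes that share words with the query."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(note.lower().split())), note)
        for note in scratchpad.values()
    ]
    relevant = [(score, note) for score, note in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [note for _, note in relevant[:limit]]


def compress_context(notes: List[str], max_words: int) -> List[str]:
    """Compress: truncate each note to a word limit (a stand-in for summarization)."""
    return [" ".join(note.split()[:max_words]) for note in notes]


def isolate_context(notes_by_topic: Dict[str, List[str]]) -> Dict[str, str]:
    """Isolate: give each specialized agent its own, separate context string."""
    return {topic: "\n".join(notes) for topic, notes in notes_by_topic.items()}


write_context("db", "the billing database uses postgres with nightly backups")
write_context("ui", "the dashboard is built with react")
selected = select_context("which database does billing use")
compressed = compress_context(selected, max_words=4)
```

In production each stand-in would be replaced by something heavier, such as a vector store for selection or an LLM summarizer for compression, but the division of responsibilities stays the same.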

Context injection dynamically inserts relevant information based on user queries, task requirements, historical interactions, retrieved knowledge, and tool outputs. This process distinguishes context engineering from static prompt engineering by enabling responsive, adaptive AI behavior.
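A minimal sketch of injection assembles the prompt per request from whichever sources happen to be available. The function name, section titles, and the order status scenario are assumptions made for illustration.

```python
from typing import List, Optional


def inject_context(
    query: str,
    history: Optional[List[str]] = None,
    retrieved: Optional[List[str]] = None,
    tool_outputs: Optional[List[str]] = None,
) -> str:
    """Build a prompt from whichever context sources are available for this request."""
    sections: List[str] = []
    for title, items in (
        ("Conversation history", history),
        ("Retrieved knowledge", retrieved),
        ("Tool outputs", tool_outputs),
    ):
        if items:  # skip empty sources so the prompt stays compact
            sections.append(f"## {title}\n" + "\n".join(items))
    sections.append(f"## User query\n{query}")
    return "\n\n".join(sections)


prompt = inject_context(
    "What is my order status?",
    retrieved=["Order 1042 shipped on Monday."],
    tool_outputs=["carrier_api: in transit"],
)
```

The same function yields a different prompt for every request, which is the practical difference from a static template.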

The RAG revolution: retrieval-augmented generation

Retrieval-Augmented Generation (RAG) serves as a cornerstone of context engineering by combining document storage, intelligent retrieval, and context augmentation. More advanced RAG patterns include small-to-big retrieval, which matches queries against small chunks but passes larger surrounding chunks to the model for synthesis; parent document retrieval, which decouples the documents used for retrieval from those used for synthesis; and multi-modal RAG, which incorporates text, images, and structured data.
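Small-to-big retrieval can be illustrated without any external dependencies. In this sketch each sentence is a small chunk that remembers its parent document, and the whole parent is returned for synthesis. Word overlap is a toy relevance score standing in for embedding similarity, and the documents and ids are invented for the example.

```python
from typing import Dict, List, Tuple

# Parent (large) chunks used for synthesis, keyed by a hypothetical id.
parents: Dict[str, str] = {
    "doc-1": "Refunds are issued within 5 days. Refunds go to the original payment method.",
    "doc-2": "Shipping takes 3 days. Express shipping is available at checkout.",
}


def split_into_small_chunks(parent_docs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Index one sentence per small chunk, remembering which parent it came from."""
    chunks: List[Tuple[str, str]] = []
    for parent_id, text in parent_docs.items():
        for sentence in text.split(". "):
            chunks.append((parent_id, sentence.strip(". ")))
    return chunks


def overlap_score(query: str, chunk: str) -> int:
    """Toy relevance score: shared lowercase words (a real system would use embeddings)."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def small_to_big(query: str, parent_docs: Dict[str, str]) -> str:
    """Match against small chunks, then return the full parent of the best match."""
    chunks = split_into_small_chunks(parent_docs)
    best_parent_id, _ = max(chunks, key=lambda chunk: overlap_score(query, chunk[1]))
    return parent_docs[best_parent_id]


context = small_to_big("how long do refunds take", parents)
```

Matching on small chunks keeps retrieval precise, while returning the parent gives the model enough surrounding text to answer well.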

Integration patterns and hybrid approaches

Modern AI systems increasingly combine prompt and context engineering through sophisticated integration patterns.

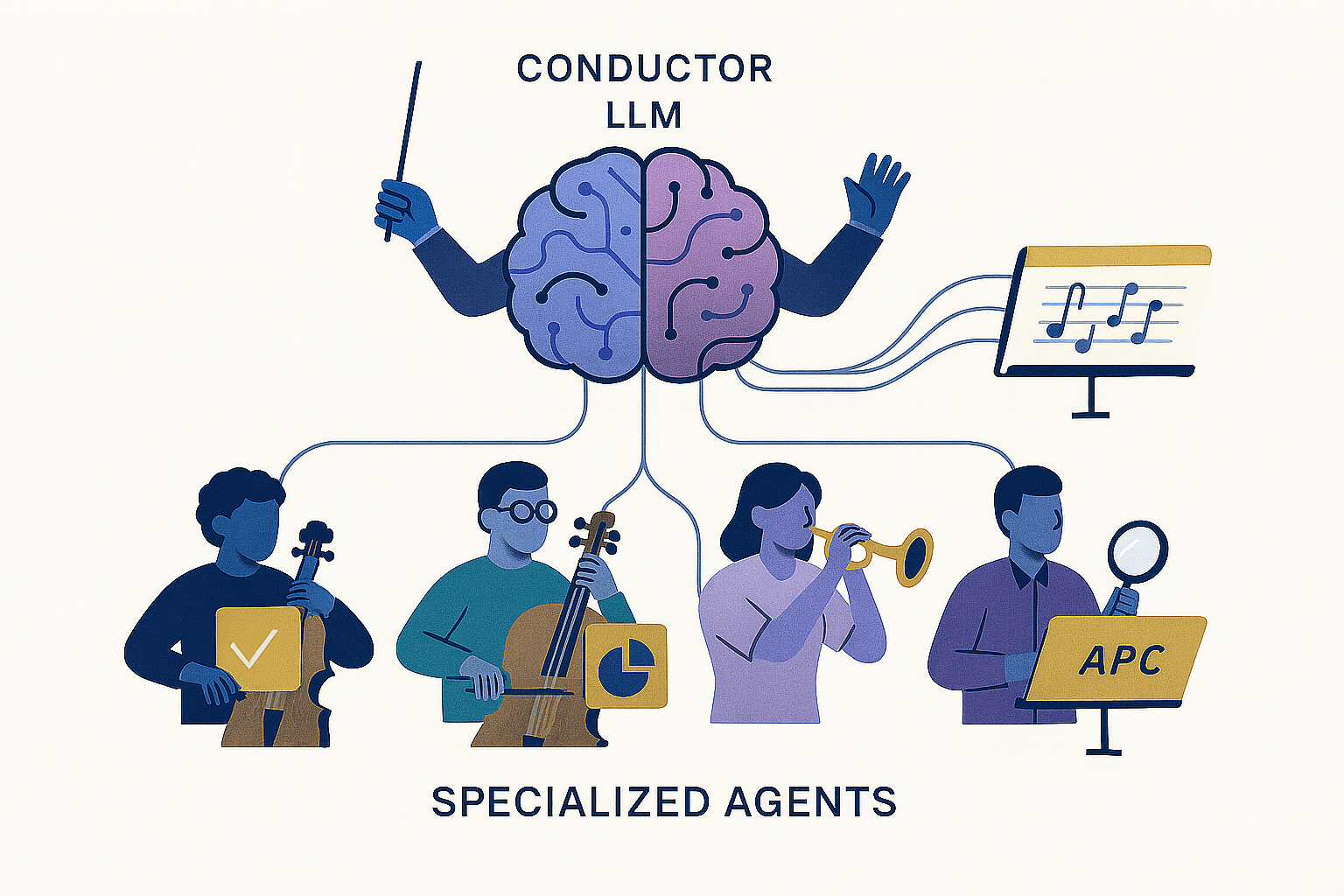

Multi-agent systems use conductor models as LLM-powered orchestrators managing task distribution, specialized agents for particular domains, and seamless API connections to external systems.
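The conductor pattern reduces to a router in front of specialized workers. In this sketch the agents are plain functions and keyword matching stands in for the LLM call a real conductor would make when deciding where to send a task. All names are hypothetical.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]


def billing_agent(task: str) -> str:
    return f"[billing] handled: {task}"


def search_agent(task: str) -> str:
    return f"[search] handled: {task}"


class Conductor:
    """Routes each subtask to a specialized agent.

    Keyword matching stands in for the LLM call a real conductor would make.
    """

    def __init__(self, agents: Dict[str, Agent], fallback: Agent) -> None:
        self.agents = agents
        self.fallback = fallback

    def route(self, task: str) -> Agent:
        lowered = task.lower()
        for keyword, agent in self.agents.items():
            if keyword in lowered:
                return agent
        return self.fallback

    def run(self, tasks: List[str]) -> List[str]:
        return [self.route(task)(task) for task in tasks]


conductor = Conductor({"invoice": billing_agent}, fallback=search_agent)
results = conductor.run(["Resend invoice 88", "Find the latest release notes"])
```

Keeping routing separate from execution means the routing rule can later be swapped for a model call without touching the agents.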

One example is the Model Context Protocol (MCP), released by Anthropic, which is rapidly becoming a common standard for AI-tool integration by standardizing connections between AI systems and external data sources.
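MCP messages are built on JSON-RPC 2.0. As a rough sketch, a request asking a server to run a tool might be serialized as follows, assuming the `tools/call` method shape described in the MCP specification. The `build_tool_call` helper, the `get_weather` tool, and its arguments are hypothetical, and the initialization handshake and transport are omitted, so check the specification before relying on this shape.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "get_weather" and its arguments are hypothetical; real tool names come from the server.
payload = build_tool_call(1, "get_weather", {"city": "Lisbon"})
```

In practice an MCP client library would build and send these messages for you; the point here is only that the tool invocation is a plain, structured request rather than free-form prompt text.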

Conclusion

The evolution from prompt engineering to context engineering represents a fundamental shift in AI system architecture and development practices. While prompt engineering remains crucial for crafting effective instructions, context engineering provides the architectural foundation for creating reliable, scalable, and intelligent AI applications that can operate effectively in complex, dynamic environments.

The advantage lies in understanding when to apply sophisticated prompting techniques and when to architect comprehensive context ecosystems. The integration of prompt and context engineering, supported by emerging standards like MCP, is creating unprecedented opportunities for AI applications across industries.

Whether you're looking to streamline your prompt workflows, build sophisticated context-aware systems, or access cutting-edge models without infrastructure headaches, Featherless provides the foundation you need to succeed.

Ready to stop wrestling with infrastructure and start building intelligent applications? Start with Featherless today and experience what happens when prompt and context engineering just work.

Have questions about implementing prompt and context engineering in your applications? Reach out to our team via support@featherless.ai or join our community Discord!