Error codes

Client Errors

400 - This Model is Cold / Not Ready for Inference

Compatible models listed in the model catalogue have one of three states: cold, loading, or warm. Warm models are loaded onto GPUs and available for inference. Cold models are compatible, but need to be loaded before they can be used for inference. A model’s card reports its current status.

Any subscriber may load a model. Time to warm a model depends on a few factors, including model size and system load. Small models can be warmed in as little as 5 minutes. Larger models could take up to an hour.
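Because warming can take anywhere from minutes to an hour, a client may want to poll for the warm state rather than resend the inference request immediately. The following is a minimal sketch of such a loop, not an official client. `get_status` is a hypothetical callable that you supply, returning "cold", "loading", or "warm" however you obtain the model's status. The one-hour timeout follows the upper bound quoted above, and the 30-second poll interval is an arbitrary assumption.

```python
import time
from typing import Callable


def wait_until_warm(
    get_status: Callable[[], str],
    timeout_s: float = 3600.0,      # upper bound quoted above: up to an hour
    poll_interval_s: float = 30.0,  # assumption: any reasonable interval works
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until the model reports "warm" or the timeout expires.

    `get_status` is a hypothetical caller-supplied function; this page does
    not document an API for reading model status.
    Returns True if the model became warm, False on timeout.
    """
    deadline = clock() + timeout_s
    while True:
        if get_status() == "warm":
            return True
        if clock() >= deadline:
            return False
        sleep(poll_interval_s)
```

Passing `sleep` and `clock` as parameters keeps the helper easy to test without real waiting.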

401 - Unauthenticated

Your API key is not recognized. Confirm that you copied it correctly, or try creating a new one.

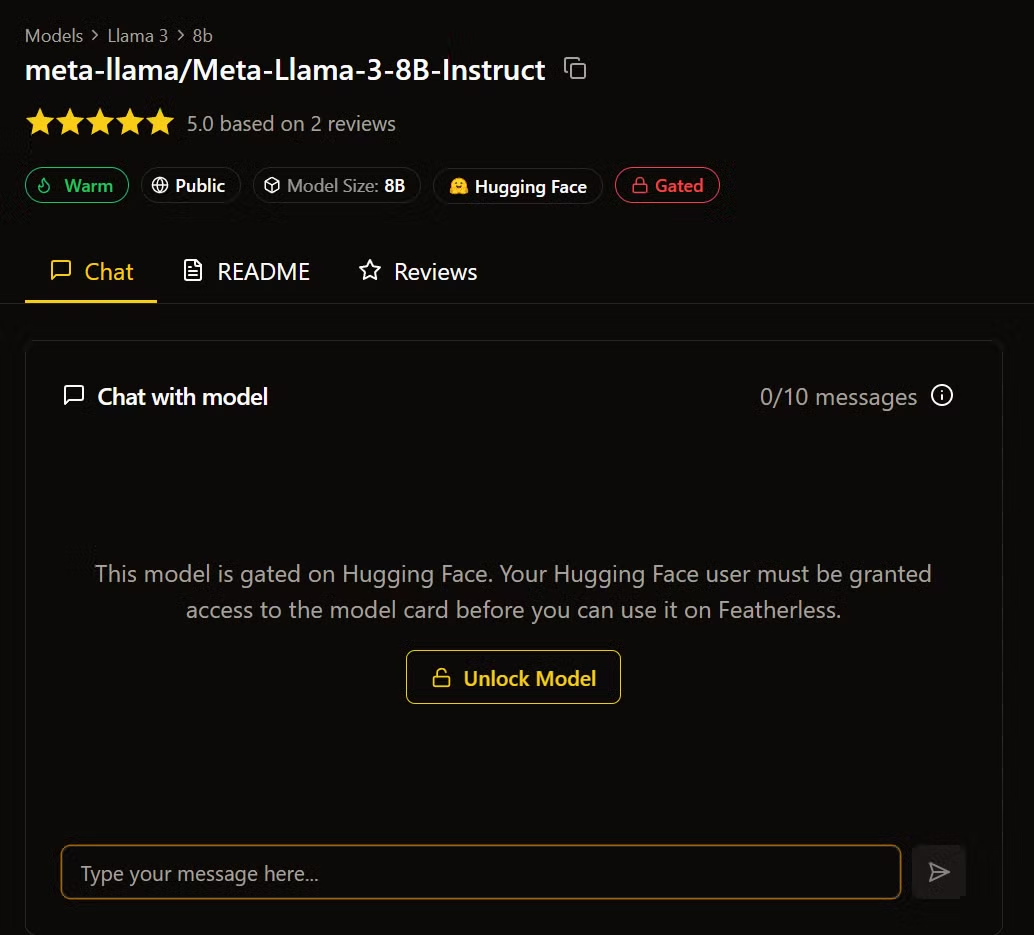

403 - Unauthorized

The model is gated, and you need to agree to the model’s license before using it. Refer to the model’s page on the platform to gain access. There will be an Unlock Model button, as in the image below.

[Image: Unlock Model button on the model’s page]

Server Errors

500 - Internal Server Error

Something failed on our end. The error is recorded and will be reviewed by staff.

503 - Service Temporarily Unavailable / No valid Executor

A better description of this error is “insufficient model capacity”: current load exceeds the capacity of the backend GPUs. In particular, this error does not mean the service is down.

The default response should be to retry the same request. If the problem persists past three retries, the message may be accurate and the model class may be temporarily unavailable.
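The retry guidance above can be sketched as a small wrapper. This is an illustration under stated assumptions, not the platform's client. `send` is a hypothetical callable that performs one request and returns a `(status_code, body)` pair. The exponential delay between attempts is an added assumption; the guidance above only says to retry.

```python
import time
from typing import Callable, Tuple


def send_with_retries(
    send: Callable[[], Tuple[int, str]],
    max_retries: int = 3,       # from the guidance above: give up after three retries
    base_delay_s: float = 1.0,  # assumption: the delay schedule is not specified
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Send a request, retrying the same request while the status is 503.

    `send` is a hypothetical caller-supplied function. Any status other
    than 503 is returned immediately, so other errors are not retried.
    """
    status, body = send()
    for attempt in range(max_retries):
        if status != 503:
            break
        # Wait 1s, 2s, 4s, ... before resending the identical request.
        sleep(base_delay_s * 2 ** attempt)
        status, body = send()
    return status, body
```

If the returned status is still 503 after the retries, treat the model class as temporarily unavailable and surface the error to the caller.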